
Artificial Intelligence (AI) is changing Business Intelligence (BI). Companies have always turned to our reports and dashboards for answers to the most important business questions. But as our business stakeholders become used to conversational experiences with chatbots like ChatGPT and Claude.ai, they will expect to be able to simply chat with the data the business already has.

Data Agents empower executives and team members to interact with data as if they were speaking to a knowledgeable data analyst who already knows your company's context. But is this really achievable for your business? Yes. It is within reach for every business today; it just takes some planning to put the foundational building blocks in place.

Here are 5 steps to get you there. Each step takes effort, but every company can start to make progress on one or more of them today.


The first step is all about establishing the return on investment (ROI) for the effort of building a Conversational BI capability.

When we design a new dashboard for our clients, we always ask two vital questions to ensure a successful outcome:

These questions establish success factors for the dashboard, and keep everyone focused on the most important messages that the visuals should convey. The result is a cleaner, more useful dashboard.

Conversational BI should also focus on Answers & Actions. However, because it is powered by AI, it differs from traditional BI in one important way: no system can answer every question with perfect accuracy every time. How can we compensate for the probabilistic nature of LLMs while still building a reliable tool?

The solution is to build traditional BI content, such as a dashboard, that deterministically answers the question correctly every time. This gives people (and AI agents) a source of truth to check against. In Power BI, the semantic model that comes with this dashboard can dramatically increase the accuracy of the Data Agent, because it is rich with metadata.
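To make the "source of truth" idea concrete, here is a minimal Python sketch of checking a Data Agent's numeric answers against values read from the deterministic dashboard. The `GoldenQuestion` class, the tolerance, and the sample questions and numbers are all hypothetical placeholders, not part of any Power BI API; in practice the expected values would be copied from your report or semantic model.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class GoldenQuestion:
    """A business question whose correct answer comes from deterministic BI content."""
    question: str
    expected: float  # value read from the dashboard / semantic model measure


def check_answer(golden: GoldenQuestion, agent_value: Optional[float],
                 rel_tol: float = 0.005) -> bool:
    """True if the agent's numeric answer matches the source of truth.

    The small relative tolerance absorbs rounding in the agent's reply
    (for example "$1.25M" instead of an exact figure).
    """
    if agent_value is None:  # the agent gave no usable number
        return False
    return math.isclose(agent_value, golden.expected, rel_tol=rel_tol)


def evaluate(golden_set: List[GoldenQuestion],
             agent_answers: Dict[str, float]) -> float:
    """Fraction of golden questions the agent answered correctly."""
    if not golden_set:
        return 0.0
    hits = sum(check_answer(g, agent_answers.get(g.question)) for g in golden_set)
    return hits / len(golden_set)


# Hypothetical golden set: expected values copied from the dashboard.
GOLDEN_SET = [
    GoldenQuestion("What were total sales in FY25 Q3?", 1_249_870.0),
    GoldenQuestion("How many orders shipped late in FY25 Q3?", 312.0),
]
```

Re-running a set like this after every change to the agent or the semantic model turns "is the agent accurate?" into a number you can track over time.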

Ask your stakeholders what their most frequent and critical questions are about the business. These might include:

Once you have the questions, ask, "What will you do with this information?" For instance, if a sales leader asks about top-selling products, the logical next action might be to analyze inventory levels or launch a targeted marketing campaign for those items. Connecting questions to actions means your Data Agent can follow up with relevant suggestions, which makes it a more useful agentic participant, whether it is interacting with people in business processes or with other AI agents in automated workflows.
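One way to capture this is a small catalog that links each stakeholder question to where its trusted answer lives and to the actions that usually follow. The Python sketch below is illustrative only: `QuestionSpec`, the report name, and the exact-match lookup are assumptions, and a real agent would let the LLM match the user's intent rather than compare strings.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class QuestionSpec:
    """One stakeholder question, where its trusted answer lives, and what people do next."""
    question: str
    answer_source: str  # hypothetical report page or measure that answers it
    actions: List[str] = field(default_factory=list)


CATALOG = [
    QuestionSpec(
        question="What are our top-selling products this quarter?",
        answer_source="Sales Overview report > Top Products visual",
        actions=[
            "Check inventory levels for these products",
            "Plan a targeted marketing campaign for these items",
        ],
    ),
]


def follow_up_prompts(question: str, catalog: List[QuestionSpec] = CATALOG) -> List[str]:
    """Turn the actions linked to a known question into follow-up suggestions."""
    key = question.strip().lower()
    for spec in catalog:
        if spec.question.lower() == key:
            # Lower-case the first letter so the action reads naturally mid-sentence.
            return [f"Would you like to {a[0].lower()}{a[1:]}?" for a in spec.actions]
    return []  # unknown question: no scripted follow-up
```

Asking about top-selling products would then yield suggestions such as "Would you like to check inventory levels for these products?"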

This foundational step aligns your technology investment with clear, measurable business outcomes and sets the stage for measuring ROI.

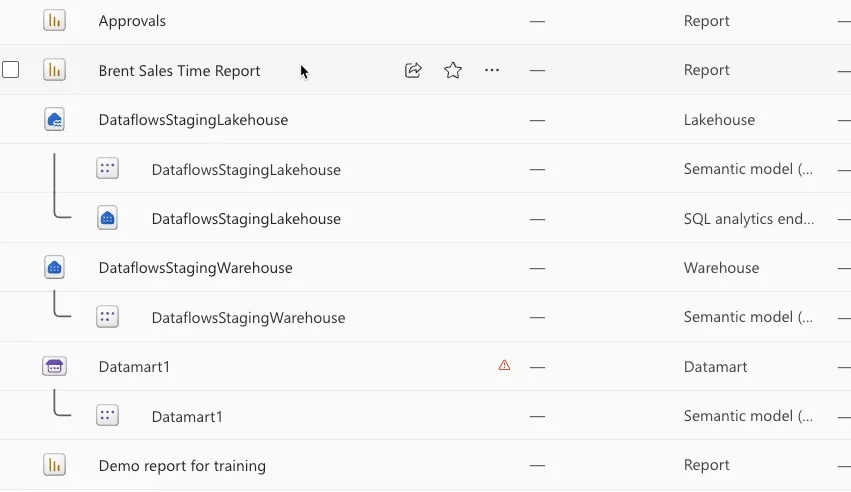

With your answers and actions defined, the next step is to prepare your data infrastructure. A robust data foundation ensures that the answers provided by your Data Agent are as accurate, consistent, and secure as possible. This phase focuses on creating the source of truth for your agent.

The right data foundation will vary with each company's situation. In Microsoft Fabric, it typically looks like a Warehouse or Lakehouse containing clean, well-modeled data. That may be a fully fledged medallion architecture, or something as simple as a few database tables.

This involves several key activities:

A solid data foundation will make your Conversational BI capability robust and stable. It also opens up more possibilities for your business, such as trend reporting and self-service analytics. The goal is to deliver trustworthy insights from solid data, dependably, and to avoid the GIGO (garbage in, garbage out) failure mode.
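The medallion pattern mentioned above can be illustrated in miniature. This sketch uses Python's built-in `sqlite3` as a stand-in for a Fabric Lakehouse or Warehouse (where you would more likely use notebooks, pipelines, or T-SQL); the table names and sample rows are hypothetical. Raw rows land in a bronze table, are cleaned into silver, and are aggregated into a gold table a semantic model can trust.

```python
import sqlite3
from typing import List, Tuple


def build_medallion(conn: sqlite3.Connection) -> List[Tuple[str, float, int]]:
    """Load raw rows (bronze), clean them (silver), aggregate them (gold)."""
    cur = conn.cursor()

    # Bronze: data exactly as it landed, including a duplicate and a missing amount.
    cur.execute("CREATE TABLE bronze_sales (order_id TEXT, product TEXT, amount TEXT)")
    cur.executemany(
        "INSERT INTO bronze_sales VALUES (?, ?, ?)",
        [
            ("1001", " Widget ", "19.99"),
            ("1001", " Widget ", "19.99"),  # duplicate load
            ("1002", "Gadget", "5.00"),
            ("1003", "Gadget", None),       # missing amount
            ("1004", "widget", "20.01"),    # inconsistent casing
        ],
    )

    # Silver: typed, trimmed, consistently cased, de-duplicated, invalid rows dropped.
    cur.execute(
        """
        CREATE TABLE silver_sales AS
        SELECT DISTINCT
            CAST(order_id AS INTEGER) AS order_id,
            UPPER(SUBSTR(TRIM(product), 1, 1)) || LOWER(SUBSTR(TRIM(product), 2)) AS product,
            CAST(amount AS REAL) AS amount
        FROM bronze_sales
        WHERE amount IS NOT NULL
        """
    )

    # Gold: a business-ready table a semantic model (and a Data Agent) can rely on.
    cur.execute(
        """
        CREATE TABLE gold_sales_by_product AS
        SELECT product, ROUND(SUM(amount), 2) AS total_sales, COUNT(*) AS orders
        FROM silver_sales
        GROUP BY product
        """
    )
    conn.commit()
    return cur.execute(
        "SELECT product, total_sales, orders FROM gold_sales_by_product ORDER BY product"
    ).fetchall()


if __name__ == "__main__":
    print(build_medallion(sqlite3.connect(":memory:")))
```

The duplicate and the row with a missing amount never reach the gold table, which is exactly the kind of garbage the GIGO failure mode warns about.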

The semantic layer acts as a crucial translator between your business users and your data infrastructure. It maps technical data elements, such as table and column names, to familiar business terms. For example, it translates `tbl_sales_fy25_q3` into a user-friendly term like "Third Quarter Sales." This layer is the key to making data interaction intuitive.

This abstraction layer serves two primary purposes:

Power BI semantic models in Microsoft Fabric are the ultimate semantic layer. In them, all the data is translated into human-readable, business-friendly terminology that aligns with natural language. They are also steeped in metadata, which further increases the likelihood that an LLM returns accurate answers.
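The translation idea itself is simple to sketch. Power BI does this with tables, DAX measures, and relationships; the Python below only illustrates the concept of mapping business terms to physical SQL. The `SEMANTIC_LAYER` dictionary and the column names `amt_net` and `prod_nm` are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical semantic layer: business term -> physical source.
# The column names (amt_net, prod_nm) are invented for illustration.
SEMANTIC_LAYER: Dict[str, Dict[str, str]] = {
    "Third Quarter Sales": {"kind": "measure", "table": "tbl_sales_fy25_q3", "sql": "SUM(amt_net)"},
    "Product": {"kind": "dimension", "table": "tbl_sales_fy25_q3", "sql": "prod_nm"},
}


def to_sql(measure: str, by: Optional[str] = None) -> str:
    """Translate business terms into SQL against the physical tables."""
    m = SEMANTIC_LAYER[measure]
    if m["kind"] != "measure":
        raise ValueError(f"{measure!r} is not a measure")
    if by is None:
        return f'SELECT {m["sql"]} AS "{measure}" FROM {m["table"]}'
    d = SEMANTIC_LAYER[by]
    if d["table"] != m["table"]:
        # A real semantic model would use relationships to join tables.
        raise ValueError("this sketch only handles terms from a single table")
    return (
        f'SELECT {d["sql"]} AS "{by}", {m["sql"]} AS "{measure}" '
        f'FROM {m["table"]} GROUP BY {d["sql"]}'
    )
```

A user (or an LLM) only ever says "Third Quarter Sales by Product"; the physical names stay hidden behind the layer.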

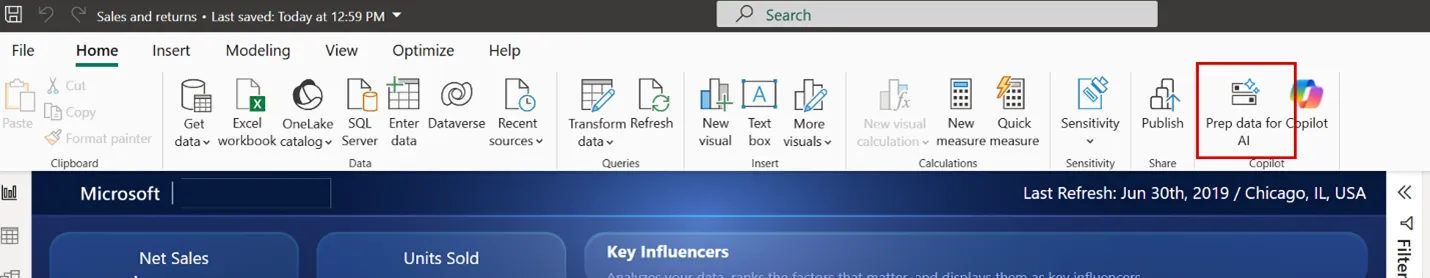

Remember the BI content we created in Step 1? In Power BI, your dashboard comes with a semantic model already built in. Because we know our Answers and Actions, we also know which tables to include in the semantic model(s), which measures they should define, and which metadata is essential. Microsoft calls this "preparing your data for AI."

By creating a robust semantic layer, you provide the LLM with the business context it needs to interpret user queries correctly and retrieve the right information. It bridges the gap between human language and machine data.
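As a rough illustration of how that business context reaches the LLM, the sketch below renders measure metadata (descriptions and synonyms, the kind of information a Power BI semantic model can carry) into plain text for an agent's instructions, and resolves a user's word to a measure. The `Measure` class, the measure definitions, and the prompt wording are hypothetical; this is not how Power BI or Copilot builds its prompts internally.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Measure:
    """A semantic-model measure plus the metadata an LLM benefits from."""
    name: str
    description: str
    synonyms: List[str] = field(default_factory=list)


# Hypothetical measure definitions for illustration only.
MEASURES = [
    Measure("Total Sales", "Net invoiced revenue after returns and discounts.",
            ["revenue", "sales", "turnover"]),
    Measure("Gross Margin %", "Gross profit divided by Total Sales.",
            ["margin", "GM%"]),
]


def build_context(measures: List[Measure]) -> str:
    """Render measure metadata as plain text to include in the agent's instructions."""
    lines = ["Answer using only these business measures:"]
    for m in measures:
        also = f" (users may say: {', '.join(m.synonyms)})" if m.synonyms else ""
        lines.append(f"- {m.name}: {m.description}{also}")
    return "\n".join(lines)


def resolve_term(term: str, measures: List[Measure]) -> Optional[str]:
    """Map a word from the user's question to a measure name via names and synonyms."""
    t = term.strip().lower()
    for m in measures:
        if t == m.name.lower() or t in (s.lower() for s in m.synonyms):
            return m.name
    return None
```

The richer the descriptions and synonyms, the less the model has to guess what "revenue" or "margin" means in your business.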

Now it's time to plan how you will integrate the Data Agent with your organization's existing approach to LLMs. Where will users find your agent, and how will they interact with it? Importantly, what are they allowed and not allowed to ask of it?

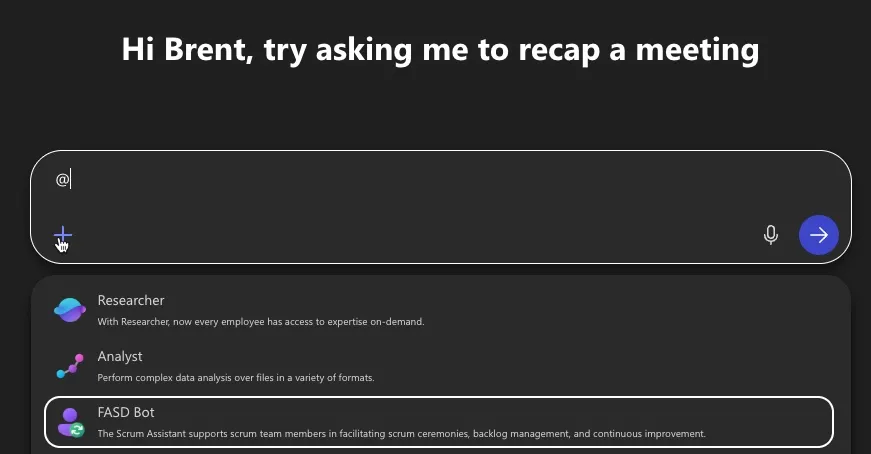

Your business is likely already using LLMs at some level to improve worker productivity or make business processes more efficient. We want to bring the agent to that same place so it is discoverable and useful. If your company uses Microsoft 365 Copilot, for example, we will plan to register our agent as an agent in the Microsoft 365 Copilot directory. If it uses ChatGPT, we will build and share a custom GPT.

This strategy should also cover how agents are monitored and managed. Users will submit prompts we cannot anticipate and act on the answers, so we need to be able to monitor, audit, and log those prompts and responses. That way we can act on the feedback we get from users and tune our model for accuracy.
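A minimal version of that audit trail is an append-only log of every prompt, response, and piece of user feedback. The Python sketch below writes JSON Lines to a local file; the file location, the record fields, and the "up"/"down" feedback values are assumptions for illustration, and a production setup would use a governed, access-controlled store instead.

```python
import json
import os
import tempfile
import time
import uuid
from typing import List, Optional

# Hypothetical log location; in production this would be a governed, access-controlled store.
LOG_PATH = os.path.join(tempfile.gettempdir(), "data_agent_audit.jsonl")


def log_interaction(path: str, user: str, prompt: str, response: str,
                    feedback: Optional[str] = None) -> str:
    """Append one prompt/response pair as a JSON line and return its id."""
    record = {
        "id": str(uuid.uuid4()),
        "timestamp": time.time(),  # seconds since the Unix epoch
        "user": user,
        "prompt": prompt,
        "response": response,
        "feedback": feedback,  # e.g. "up" / "down" from a thumbs widget
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record["id"]


def read_log(path: str) -> List[dict]:
    """Load all logged interactions (empty list if nothing has been logged yet)."""
    if not os.path.exists(path):
        return []
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def needs_review(records: List[dict]) -> List[dict]:
    """Interactions users flagged as wrong: candidates for tuning."""
    return [r for r in records if r.get("feedback") == "down"]
```

Reviewing the `needs_review` list regularly is one simple way to turn user feedback into concrete fixes, such as a missing synonym or an unclear measure description.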

Note: this step doesn't strictly have to come after step 3, but it is an important element of making a Data Agent successful.

Your strategy should address three core components:

A well-defined LLM strategy ensures your Conversational BI agent is intelligent, reliable, and secure, capable of understanding context and delivering precise insights.

The final step is to bring everything together by creating the Data Agent. This agent is the user-facing application—the chatbot or voice assistant—that your team will interact with.

There are many ways to approach this with the various LLM foundation models, but the most flexible approach is to expose your data to the agent through a model-agnostic tool interface. In today's stack, that is most often an MCP (Model Context Protocol) server. You configure the agent with all the context it will need to answer users' questions, and connect it to the Data Foundation and Semantic Layer you built in steps 2 and 3.
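To show what "exposing data through MCP" means at the message level, here is a stdlib-only Python sketch that answers JSON-RPC requests using MCP's `tools/list` and `tools/call` method names and result shapes. It is a simplification, not a working MCP server: a real server also handles the `initialize` handshake and a transport such as stdio, which the official MCP SDKs take care of. The `get_measure` tool and its placeholder value are hypothetical.

```python
import json
from typing import Any, Dict


def get_measure(measure: str, period: str) -> str:
    """Hypothetical tool: a real server would query the semantic model here."""
    placeholder = {("Total Sales", "FY25 Q3"): "1,249,870"}  # made-up value
    return placeholder.get((measure, period), "No data for that measure and period.")


# Tool registry: name -> callable plus the metadata advertised to the model.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_measure": {
        "fn": get_measure,
        "description": "Return the value of a business measure for a reporting period.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "measure": {"type": "string", "description": "Business measure name"},
                "period": {"type": "string", "description": "Reporting period, e.g. FY25 Q3"},
            },
            "required": ["measure", "period"],
        },
    },
}


def _reply(req_id: Any, **body: Any) -> str:
    """Wrap a result or error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(message: str) -> str:
    """Handle one JSON-RPC request using MCP's tools/list and tools/call method names."""
    req = json.loads(message)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = tool["fn"](**params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}]})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

Because the model only sees tool names, descriptions, and input schemas, the same server can back an agent built on different foundation models, which is what makes this approach flexible.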

Consider the user interface and overall experience. Will users interact via a chat window embedded in your BI portal, a mobile app, or a tool like Microsoft Teams? The goal is to make data access as seamless as possible, integrating it directly into the workflows where decisions are made.

One powerful approach is to use a framework like Microsoft Copilot with custom data agents. This allows you to build a secure, context-aware agent that leverages the power of Azure OpenAI while remaining connected to your proprietary business data. The agent should be able to:

At FirstLight, we have developed an internal agent called "FASD Bot". This Data Agent can answer questions from internal data sources, and search our Confluence-based knowledge base to recommend best practices and common solutions to data & analytics challenges.

With these foundational pieces in place, you can bring a Data Agent to life to add value in your organization. In future blog posts, we'll dive into each of these steps.

FirstLight helps our clients move forward with BI, data science, and AI. Which step do you need to work on this quarter? https://www.firstlightbi.ai/contact-firstlight